Overview:

If you are a developer facing problems using AI models in your applications because of their large size, this model conversion guide is for you. Most mobile applications need real-time results, and a large model processing data in real time can fill up the phone's memory, which causes lag in live streams, in the application, or on the phone as a whole.

To avoid such frustrating situations, we use lightweight models in mobile applications. In this article, I'll shed light on how to convert some of the most widely used models into formats that mobile applications support.

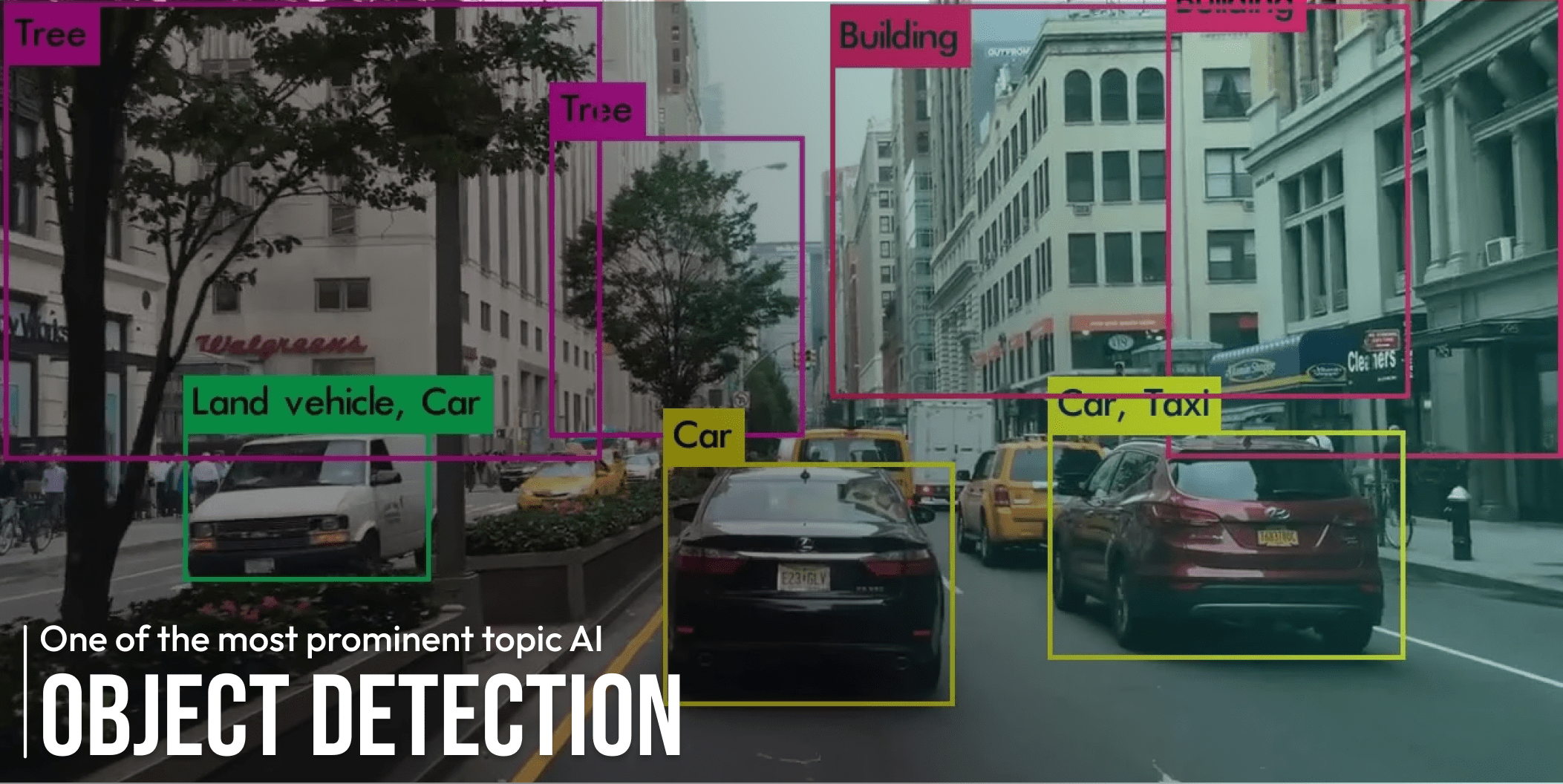

In the previous article, I discussed some basics of the YOLO algorithm and how it is used in real-time object detection. In this article, I will cover the following:

- Conversion of the YOLOv5 model to TensorFlow formats and many others

- Conversion of the YOLOv3 model to TensorFlow SavedModel, TensorFlow Lite, and TensorFlow.js

Problem:

When building web or mobile applications that use artificial intelligence, we need models to perform tasks such as object detection and object classification. These models can be very large, which makes them hard to use in a mobile application.

Our team faced a very similar problem, and this is where model conversion comes in. Mobile applications can generally only use small models, so we convert large models into smaller, mobile-friendly formats.

Conversion of YOLOv5 model to TensorFlow:

We can easily convert YOLOv5 PyTorch models into different formats, because YOLOv5 officially supports these conversions and provides a Python script, export.py, for them.

After you have trained your model, you will have a model file with a '.pt' extension. You can then follow these simple steps to convert it into different formats.

Step:01

Clone the YOLOv5 repository and install the dependencies listed in requirements.txt with the following commands:

git clone https://github.com/ultralytics/yolov5 # clone

cd yolov5

pip install -r requirements.txt # install

Step:02

Place the model file inside the cloned yolov5 folder.

Before running the conversion, here are the two export.py parameters we will use:

--weights: The path of the model's weight file.

--include: The format(s) you want to convert your model to.
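To make the two parameters concrete, here is a simplified, hypothetical mirror of how a script like export.py can read them with Python's argparse. It is not the real export.py parser, which defines many more options; it assumes, as in recent YOLOv5 versions, that --include accepts one or more formats in a single call.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical, simplified mirror of the two export.py options described above."""
    parser = argparse.ArgumentParser(description="Sketch of YOLOv5 export options")
    # Path to the trained model's .pt weight file
    parser.add_argument("--weights", type=str, default="yolov5s.pt",
                        help="path of the model's weight file")
    # One or more target formats, e.g. --include tfjs tflite
    parser.add_argument("--include", nargs="+", default=["torchscript"],
                        help="format(s) to convert the model to")
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args(["--weights", "yolov5s.pt", "--include", "tfjs", "tflite"])
    print(args.weights)  # yolov5s.pt
    print(args.include)  # ['tfjs', 'tflite']
```

Because --include is declared with nargs="+", every value after the flag is collected into a list, which is why several formats can be requested at once.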

Step:03

In my case, the model file is 'yolov5s.pt' and I am converting it to TensorFlow.js.

Now open a terminal in the folder and enter the following command:

python export.py --weights yolov5s.pt --include tfjs

After this, you will see output like the following:

As the last line of the output shows, the model has been exported successfully. The export creates a folder named after the model with a web_model suffix (for example, yolov5s_web_model), and the converted model is inside that folder.
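To check what ended up in the exported folder, the sketch below reads the model.json file that a TensorFlow.js model folder contains and lists the binary weight shards it references. summarize_tfjs_model is a hypothetical helper, and the folder name yolov5s_web_model in the usage part is an assumption based on the naming described above; adjust it to whatever folder your export produced.

```python
import json
from pathlib import Path


def summarize_tfjs_model(model_dir: str) -> dict:
    """Hypothetical helper: summarize model.json in a TensorFlow.js model folder."""
    folder = Path(model_dir)
    manifest = json.loads((folder / "model.json").read_text())
    # "weightsManifest" is a list of groups; each group names its binary shard
    # files under "paths" and the tensors stored in them under "weights".
    groups = manifest.get("weightsManifest", [])
    shards = [path for group in groups for path in group.get("paths", [])]
    num_tensors = sum(len(group.get("weights", [])) for group in groups)
    return {
        "format": manifest.get("format"),
        "shards": shards,
        "num_tensors": num_tensors,
        # Shards referenced by model.json but missing from the folder
        "missing_shards": [p for p in shards if not (folder / p).exists()],
    }


if __name__ == "__main__":
    folder = Path("yolov5s_web_model")  # assumed name; use the folder your export created
    if (folder / "model.json").exists():
        print(summarize_tfjs_model(str(folder)))
```

A non-empty missing_shards list usually means the folder was copied incompletely; the browser needs model.json and every shard file it lists.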

YOLOv5 supports the following conversion formats (the value to pass to --include is shown in parentheses):

- TorchScript (torchscript)

- ONNX (onnx)

- OpenVINO (openvino)

- TensorRT (engine)

- CoreML (coreml)

- TensorFlow SavedModel (saved_model)

- TensorFlow GraphDef (pb)

- TensorFlow Lite (tflite)

- TensorFlow Edge TPU (edgetpu)

- TensorFlow.js (tfjs)

To convert the model into any of these formats, we just need to change the value of the '--include' parameter to the format name shown in parentheses.
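Since only the --include value changes between formats, a small helper can map the format names from the list to their --include values and assemble the command. export_command and EXPORT_FORMATS are hypothetical names; the helper only builds the command string, which you would then run from the cloned yolov5 folder. Passing several formats at once assumes your export.py version accepts multiple --include values, as recent versions do.

```python
import shlex

# Format names from the list above mapped to the values export.py expects for --include
EXPORT_FORMATS = {
    "TorchScript": "torchscript",
    "ONNX": "onnx",
    "OpenVINO": "openvino",
    "TensorRT": "engine",
    "CoreML": "coreml",
    "TensorFlow SavedModel": "saved_model",
    "TensorFlow GraphDef": "pb",
    "TensorFlow Lite": "tflite",
    "TensorFlow Edge TPU": "edgetpu",
    "TensorFlow.js": "tfjs",
}


def export_command(weights: str, *formats: str) -> str:
    """Hypothetical helper: build an export.py command for one or more formats."""
    valid = set(EXPORT_FORMATS.values())
    unknown = [f for f in formats if f not in valid]
    if not formats or unknown:
        raise ValueError(f"expected one or more of {sorted(valid)}, got {list(formats)}")
    # shlex.join quotes arguments safely, e.g. weight paths containing spaces
    return shlex.join(["python", "export.py", "--weights", weights, "--include", *formats])


if __name__ == "__main__":
    print(export_command("yolov5s.pt", EXPORT_FORMATS["TensorFlow Lite"], "tfjs"))
    # python export.py --weights yolov5s.pt --include tflite tfjs
```

Validating the format names before launching the export catches typos such as "tensorflowjs" immediately instead of partway through a long run.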

Conversion of YOLOv3 model to TensorFlow:

Here we will discuss converting the YOLOv3 model to TensorFlow formats. First, we convert the model to the TensorFlow SavedModel (saved_model) format. Then we convert that to other TensorFlow formats such as TensorFlow Lite and TensorFlow.js, since TensorFlow supports converting a saved_model to these formats.

Step:01

Clone the repository and install the requirements with the commands below:

git clone https://github.com/Abdulmannan112233/Yolo_v3_Tensorflow.git

cd Yolo_v3_Tensorflow

pip install -r requirements.txt

Step:02

Place your weight file, the coco.names file, and the configuration (.cfg) file in the repository directory.
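A mismatch between the class names file and the configuration file is an easy mistake with custom models. The sketch below is a hypothetical preflight check, not part of the linked repository: it counts the names in coco.names (one class name per line, the usual Darknet format) and compares that count with the classes= value in every [yolo] section of the .cfg file. The file names in the usage part are assumptions.

```python
from pathlib import Path


def count_class_names(names_path: str) -> int:
    """Count class names in a Darknet-style .names file (one name per line)."""
    lines = Path(names_path).read_text().splitlines()
    return sum(1 for line in lines if line.strip())


def yolo_class_counts(cfg_path: str) -> list:
    """Collect the classes= value from every [yolo] section of a Darknet .cfg file."""
    counts, section = [], None
    for raw in Path(cfg_path).read_text().splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        if line.startswith("[") and line.endswith("]"):
            section = line[1:-1].strip().lower()
        elif section == "yolo" and "=" in line:
            key, value = (part.strip() for part in line.split("=", 1))
            if key == "classes":
                counts.append(int(value))
    return counts


def check_names_match_cfg(names_path: str, cfg_path: str) -> int:
    """Hypothetical preflight check; returns the class count if both files agree."""
    num_names = count_class_names(names_path)
    cfg_counts = yolo_class_counts(cfg_path)
    if not cfg_counts:
        raise ValueError(f"no [yolo] sections with classes= found in {cfg_path}")
    if any(count != num_names for count in cfg_counts):
        raise ValueError(f"{names_path} has {num_names} names but cfg says {cfg_counts}")
    return num_names


if __name__ == "__main__":
    # File names are assumptions; use the names of the files you placed in the folder
    if Path("coco.names").exists() and Path("yolov3.cfg").exists():
        print(check_names_match_cfg("coco.names", "yolov3.cfg"))
```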

Step:03

Run the following command:

python convert_weights_pb.py

You can also use the --weights_file and --class_names parameters to specify the paths of the weight file and the coco.names file. See the README on GitHub for more information about these parameters.

This command converts the model to the TensorFlow saved_model format, and you will see output like the following:

Now you can convert this saved_model to different formats in these simple steps.

Converting saved_model to TensorFlow Lite:

The command below converts your saved_model to TensorFlow Lite:

tflite_convert --saved_model_dir=saved_model/ --output_file=yolo_v3.tflite --saved_model_signature_key='predict'
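Once the command has run, a quick way to confirm that the output really is a TensorFlow Lite model is to look at its header: TFLite files are FlatBuffers whose file identifier, stored at bytes 4 to 8, is TFL3. The helper below is a hypothetical sanity check only; loading the model with tf.lite.Interpreter is the more thorough test.

```python
from pathlib import Path


def looks_like_tflite(path: str) -> bool:
    """Hypothetical sanity check: TFLite FlatBuffers carry the identifier b"TFL3" at bytes 4..8."""
    with open(path, "rb") as f:
        header = f.read(8)
    return len(header) == 8 and header[4:8] == b"TFL3"


if __name__ == "__main__":
    model = Path("yolo_v3.tflite")  # output file name from the command above
    if model.exists():
        size_mb = model.stat().st_size / (1024 * 1024)
        print(f"TFL3 header: {looks_like_tflite(str(model))}, size: {size_mb:.1f} MB")
```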

After running the command, you will see output like the following:

Converting saved_model to TensorFlow.js:

Converting the saved_model to TensorFlow.js requires a separate environment because the dependencies conflict: the conversion above used TensorFlow v1, while converting a saved_model to TensorFlow.js needs TensorFlow v2. The requirements for this conversion are in the file 'requirements_for_tensorflowjs.txt' in the GitHub repository linked in the previous section. Use the command below to start the conversion:

tensorflowjs_converter model/ result --signature_name predict

After running the command, you will see output like the following:

In the above command, 'model/' is the path of the folder containing saved_model.pb, and 'result' is the name of the folder where the TensorFlow.js model will be saved.

You can also add the '--quantization_bytes' parameter, which defines how many bytes to optionally quantize (compress) the weights to. Valid values are 1 and 2, which quantize int32 and float32 weights to 1 or 2 bytes respectively. The default (unquantized) size is 4 bytes.
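To illustrate what --quantization_bytes does, here is a simplified sketch of per-tensor min/max (affine) quantization: each float weight is mapped to an integer from 0 to 255 (1 byte) or 0 to 65535 (2 bytes), and a scale and minimum are kept so the weights can be approximately restored. This is in the spirit of the TensorFlow.js converter's quantization, not its actual code, and the 10-million-parameter model in the usage part is hypothetical.

```python
def quantize(weights, num_bytes):
    """Simplified per-tensor min/max quantization of float weights to 1 or 2 bytes."""
    if num_bytes not in (1, 2):
        raise ValueError("num_bytes must be 1 or 2")
    levels = 2 ** (8 * num_bytes) - 1  # 255 for 1 byte, 65535 for 2 bytes
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / levels if hi > lo else 1.0
    # Each weight becomes an integer in [0, levels]; scale and lo are stored alongside
    q = [min(levels, round((w - lo) / scale)) for w in weights]
    return q, scale, lo


def dequantize(q, scale, lo):
    """Approximately restore the float weights from their quantized form."""
    return [lo + v * scale for v in q]


def weight_bytes(num_params, bytes_per_weight=4):
    """Storage needed for the weights; 4 bytes per float32 value when unquantized."""
    return num_params * bytes_per_weight


if __name__ == "__main__":
    q, scale, lo = quantize([-1.0, -0.25, 0.0, 0.5, 1.0], num_bytes=1)
    print(q, scale, lo)
    # Hypothetical 10-million-parameter model
    print(weight_bytes(10_000_000) / 1e6, "MB unquantized")  # 40.0 MB unquantized
    print(weight_bytes(10_000_000, 1) / 1e6, "MB with 1-byte quantization")  # 10.0 MB with 1-byte quantization
```

The size saving is where the benefit comes from: weights shrink to a quarter (1 byte) or half (2 bytes) of their float32 size, at the cost of a rounding error of at most half a scale step per weight.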

Conclusion:

Above, we looked at how to convert the most widely used YOLO object detection models to TensorFlow and many other formats. I hope that after reading this article you can perform some model conversions yourself.

In the next part, I will try converting some classification models to TensorFlow format. So, stay tuned.